Five for Friday: Issue #4

On OpenAI's whirlwind week, Meta Connect, the torpedoing of California's SB1047 AI Bill, Liquid AI's new Liquid Foundation Models, and further Microsoft Copilot updates

If you've been feeling like the AI world's been on a caffeine binge this week, you are not alone. Since coming back from vacation last week, I've been drowning in a tsunami of AI updates, desperately trying to keep my head above the digital waters. So grab your floaties, and let's dive into this week's Five for Friday!

#1 OpenAI's Whirlwind Week

How do I even start to summarise the OpenAI headlines from this week?

The team at OpenAI were probably playing a game of "How Many Headlines Can We Generate in a Week?" And boy, are they winning.

Late last week, ChatGPT's "Advanced Voice Mode" finally escaped the confines of beta testing and is now available to all US subscribers. With five new voices to choose from, you can now have your AI assistant sound like anything from a soothing NPR host to an overexcited game show announcer. However, Advanced Voice Mode is not yet available in the EU and a few other European countries, because the feature technically runs afoul of the EU AI Act's ban on emotion-detecting technology.

OpenAI's DevDay also took place this week, introducing four new developer-focused features:

Prompt Caching: Offers a 50% discount on recently processed input tokens, potentially reducing costs for developers significantly.

Vision Fine-Tuning: The ability to fine-tune GPT-4o on images as well as text to customise its visual capabilities, opening up new possibilities in fields like autonomous vehicles and medical imaging.

Realtime API: Enables low-latency, speech-to-speech applications, such as voice controls for apps or voice-capable customer service chatbots.

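Model Distillation: Lets developers use the outputs of larger models such as GPT-4o to fine-tune smaller, cheaper ones such as GPT-4o mini, helping smaller companies leverage advanced model capabilities more efficiently.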
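To make the Prompt Caching discount concrete, here is a back-of-the-envelope cost calculation. It is a sketch under stated assumptions, not OpenAI's billing logic: it assumes an illustrative list price of US$2.50 per million input tokens (check OpenAI's pricing page for current rates) and applies the 50% discount only to the portion of the prompt served from cache. The function and constant names are hypothetical.

```python
# Back-of-the-envelope input-token cost with prompt caching.
# ASSUMPTION: the price below is illustrative only; check OpenAI's pricing page.
ASSUMED_PRICE_PER_MILLION_INPUT_TOKENS = 2.50  # US$, hypothetical
CACHE_DISCOUNT = 0.50  # 50% off input tokens that were recently processed (cached)


def input_cost_usd(total_tokens: int, cached_tokens: int,
                   price_per_million: float = ASSUMED_PRICE_PER_MILLION_INPUT_TOKENS,
                   discount: float = CACHE_DISCOUNT) -> float:
    """Return the input-token cost when `cached_tokens` of `total_tokens` hit the cache."""
    if not 0 <= cached_tokens <= total_tokens:
        raise ValueError("cached_tokens must be between 0 and total_tokens")
    uncached_tokens = total_tokens - cached_tokens
    # Cached tokens are billed at (1 - discount) of the normal rate.
    billable_tokens = uncached_tokens + cached_tokens * (1 - discount)
    return billable_tokens * price_per_million / 1_000_000


if __name__ == "__main__":
    without_cache = input_cost_usd(10_000, 0)
    with_cache = input_cost_usd(10_000, 8_000)
    print(f"No cache:   ${without_cache:.4f}")
    print(f"With cache: ${with_cache:.4f}")
    print(f"Saving:     {1 - with_cache / without_cache:.0%}")
```

With these assumed numbers, a 10,000-token prompt of which 8,000 tokens hit the cache costs US$0.015 instead of US$0.025, a 40% saving on input. Because only cached input tokens are discounted, the saving on input can never exceed 50%, and output tokens are unaffected.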

The company closed a massive $6.6bn funding round valuing the business at $157bn. Investors included Microsoft, Nvidia and a host of VCs. Interestingly, Apple pulled out of the talks at the last moment. OpenAI's financial triumph was partially overshadowed by the departure of key team members: CTO Mira Murati, VP of Research Barret Zoph, and Chief Research Officer Bob McGrew all announced their exits, raising questions about the company's stability and future direction.

And just when you thought they were done, OpenAI yesterday introduced Canvas, a new interface for ChatGPT designed to enhance collaboration on writing and coding projects. Canvas opens in a separate window, allowing users to work alongside ChatGPT beyond simple chat interactions. It offers features like inline feedback, targeted editing, and specialised shortcuts for writing and coding tasks, making it easier for users to refine and develop their work with AI assistance.

Perspectives:

From a consumer perspective, I'm most excited about Canvas. The ability to work more seamlessly across and within projects, without having to cut and paste text from ChatGPT into other applications, will be helpful. It will also enable OpenAI to compete even more effectively with Google and Microsoft, whose AI models are already embedded in their productivity suites.

Amid the backslapping over OpenAI's latest funding round, DevDay, and new consumer products, the company's continued haemorrhaging of founders and key executives, alongside the watering down of its safety team and capabilities, is becoming increasingly concerning.

#2 AR You Kidding Me? Meta's Latest AI Extravaganza

Meta reveals AR and VR products, celebrity voices for its AI, and more

Meta Connect 2024 represents the digital fever dreams of everyone’s most trusted social media baron, Mark Zuckerberg.

The Orion Augmented Reality (AR) glasses stole the show, but they are only a prototype for now and several years away from being available to consumers. In fact, each pair of glasses is said to currently cost ~US$10,000 to produce. Orion's hardware consists of three parts: the glasses; a "neural wristband" for controlling them; and a small wireless computing device that has to be kept within 12 feet of the glasses.

Next up were the updated Ray-Ban smart glasses. Beyond taking pictures and video and making calls, the glasses are now equipped with Meta AI, allowing you, for instance, to record and send voice messages on WhatsApp and Messenger. Let's not forget a new "Reminders" feature, because apparently, we all need another device to nag us about our to-do lists. Yours for only US$299.

Other announcements included the Quest 3S VR headset at a wallet-friendly US$299.99, and celebrity voices for Meta's AI assistant. If you've ever wished for John Cena or Awkwafina to remind you to buy milk, you're in luck.

But wait, there's more! Meta is testing AI-generated images in your social media feeds, because apparently, your friends' carefully curated vacation photos weren't making you jealous enough.

Finally, for the developers out there, Meta introduced Llama Stack, a comprehensive suite of tools designed to simplify AI deployment across a wide range of computing environments, potentially offering a turnkey solution for every stage of AI model and application development.

Perspectives:

Meta's various consumer gadgets appeal only to the earliest and most tech-obsessed adopters. And the use of automated AI-generated images in social media feeds frankly feels dystopian to me.

The launch of Llama Stack is potentially the most significant and interesting announcement. Meta's AI strategy is a long-term one, centred on its open-source Llama models, which the company has so far come nowhere close to monetising. This new integrated stack is part of the company's push to place its open-source models at the heart of enterprise AI development. Once a critical mass of adoption is reached, and following in the time-honoured footsteps of other open-source pioneers (e.g., MongoDB, Red Hat, Docker), monetisation will come in the form of services and ecosystem access.

#3 404 Error: AI Regulation Not Found in California

California’s Governor Newsom vetoes landmark AI safety legislation

In a move that has some applauding but that will also surely ruffle feathers, California Governor Gavin Newsom vetoed SB1047, the state's ambitious AI safety bill.

The legislation, which aimed to establish first-in-the-nation regulations against AI misuse, found itself caught in the crossfire of a heated tech industry debate.

Newsom explained his decision by saying that the bill, while "well-intentioned", "does not take into account whether an AI system is deployed in high-risk environments, involves critical decision-making or the use of sensitive data."

SB1047 would have required AI companies with deep pockets (those spending over $100 million to train models or $10 million to fine-tune them) to implement safety measures and protocols to reduce the risk of catastrophic events.

The bill managed to do something previously thought impossible: it united Elon Musk and Anthropic on one side, with Google, Meta, and OpenAI on the other. Talk about strange bedfellows.

Perspectives:

Opponents of SB1047 believed that it would stifle progress. Given how some tech giants are tiptoeing around Europe's AI Act like it's a minefield, they might have a point. But it raises the question: is pedal-to-the-metal progress always the best route, or should we occasionally check the map?

Let's also not forget the elephant (or should we say, the tech giant) in the room: the immense influence of Silicon Valley's lobbyists, who can apparently move mountains, or at least Governors.

#4 Liquid AI Makes a Splash

MIT spinoff develops state-of-the-art non-Transformer models

Liquid AI, a startup co-founded by former MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) researchers, has just unveiled its first series of multimodal AI models, dubbed Liquid Foundation Models (LFMs).

Instead of being based on the dominant Transformer architecture that powers most of today's Large Language Models (LLMs), LFMs are built from the ground up using “principles from dynamical systems, signal processing, and numerical linear algebra”.

Full disclosure: Those terms read like an alien language to me. So, I enlisted Claude's help to break it down into something more digestible:

Dynamical Systems: A branch of mathematics that studies how systems change over time. In AI, it can help create models that adapt and evolve based on input.

Signal Processing: Involves analysing and modifying signals, such as audio or data streams. In AI, it can help with efficient data handling and feature extraction.

Numerical Linear Algebra: Relates to solving mathematical problems involving matrices and vectors efficiently. In AI, it's crucial for optimising computations and reducing memory usage.
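To give a feel for the "dynamical systems" idea, here is a toy example in Python. It is only a generic illustration, not Liquid AI's architecture: a scalar system whose hidden state h is updated by each new input x via h_t = a·h_{t-1} + b·x_t, with output y_t = c·h_t. The function name and the parameters a, b and c are hypothetical. What it shows is that the state stays the same size however long the input sequence runs, whereas a Transformer's attention keeps keys and values for every previous token, which is one reason non-Transformer designs can use less memory.

```python
from typing import List, Sequence


def run_linear_dynamical_system(a: float, b: float, c: float,
                                inputs: Sequence[float],
                                h0: float = 0.0) -> List[float]:
    """Toy scalar dynamical system: h_t = a*h_{t-1} + b*x_t, y_t = c*h_t.

    Hypothetical illustration only. The state `h` is a single number no matter
    how many inputs are processed, so memory stays constant as the sequence grows.
    """
    h = h0
    outputs: List[float] = []
    for x in inputs:
        h = a * h + b * x      # the state evolves in response to each new input
        outputs.append(c * h)  # read the output off the current state
    return outputs


if __name__ == "__main__":
    # A single "impulse" followed by silence: the system remembers it,
    # with the memory fading by a factor of `a` at every step.
    print(run_linear_dynamical_system(0.5, 1.0, 1.0, [1.0, 0.0, 0.0]))
```

Feeding in a single impulse, `[1.0, 0.0, 0.0]`, with a = 0.5 returns `[1.0, 0.5, 0.25]`: the input is carried forward in the state and gradually fades, rather than being stored token by token.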

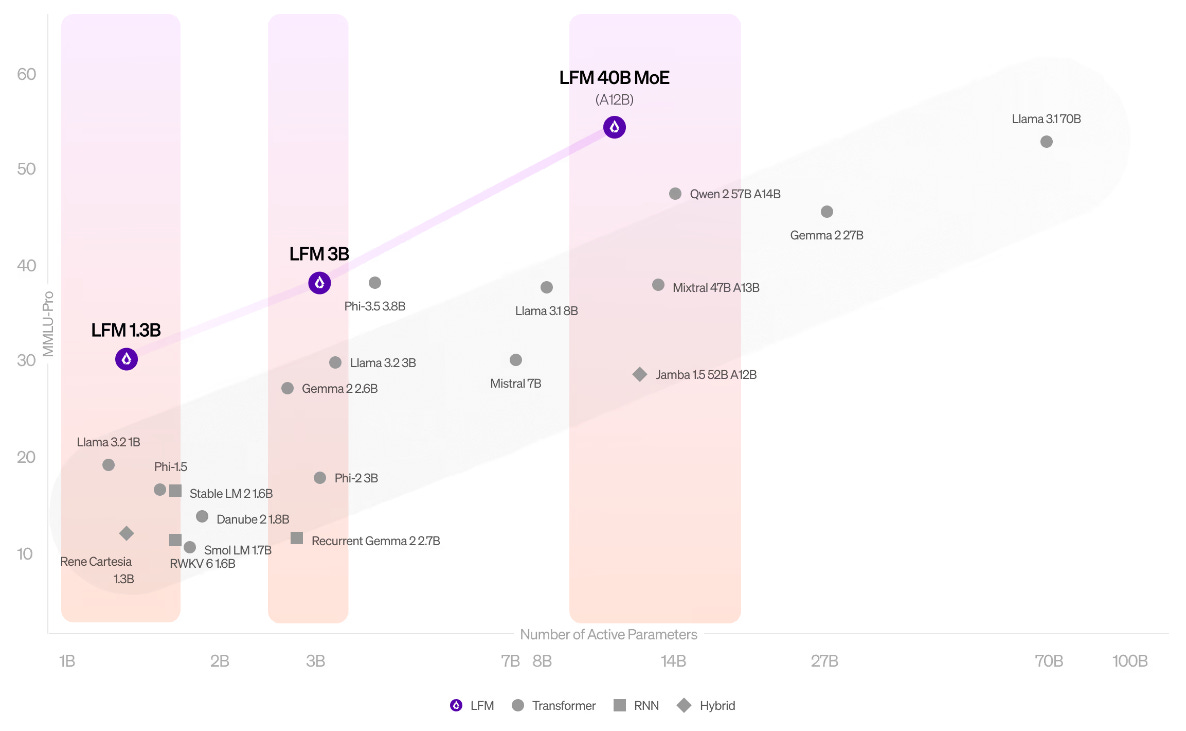

These new LFMs come in three flavours: LFM-1.3B, LFM-3B, and LFM-40B MoE. Early benchmarks published by Liquid AI already show these models outperforming similarly sized Transformer-based models while using significantly less memory.

Perspectives:

While not the biggest news this week, I think LFMs hold significant promise for helping to push back the boundaries of traditional LLMs.

Their significantly higher efficiency also has important implications for energy consumption, and therefore the environment, as well as for deployment on edge computing devices: in other words, running AI independently on the device's own hardware rather than in the cloud.

#5 Copilot's Makeover, Redux

Microsoft's AI assistant learns to talk, see, and think deeper

Microsoft is rolling out significant updates to Copilot, its AI platform for Windows 11, introducing new features that aim to make the assistant more intuitive and useful.

Copilot Voice allows for more natural conversations with the AI. Users can choose from four different voices, and the AI even throws in verbal cues like "cool" and "huh" to seem "more engaged". Microsoft has apparently hired "an entire army" of creative directors, among them psychologists, novelists and comedians, for this. Hmm.

Copilot will also be getting a pair of digital eyes. The new Copilot Vision feature allows the AI to understand and interact with whatever you're looking at on your screen in real time. Whether you're shopping online or researching a project, Copilot can now offer insights and suggestions based on what it "sees." I'm not sure whether this feels a little too much like having that "smart" friend looking over your shoulder, constantly chiming in with "helpful" comments.

Subscribers to Copilot Pro will also have access to a new Think Deeper feature, which is designed to reason through complex decisions, such as which city to move to or which car to buy.

Rounding out the updates are Copilot Daily, which delivers personalised news and weather summaries, and Click to Do, a feature that offers quick actions based on screen content.

Perspectives:

These updates come hot on the heels of other Copilot updates, including Copilot Pages, Copilot in Excel, and Copilot Agents, which we covered two weeks ago.

While most of these features are simply Microsoft playing catch-up with OpenAI and other competitors, Copilot Vision is novel enough to stand out from the crowd. With the AI able to "see" what we see, human-AI collaboration should become a lot smoother and more versatile.

Microsoft also appears to have learnt from the Windows Recall debacle; it pulled that feature in June of this year over privacy concerns. This time, the company assures users that Copilot Vision will be entirely opt-in, with none of the data stored or used for AI training.

Justin Tan is passionate about supporting organisations and teams to navigate disruptive change and move towards sustainable, robust growth. He founded Evolutio Consulting in 2021 to help senior leaders upskill and accelerate the adoption of AI within their organisations through AI literacy and proficiency training. He also works with his clients to design and build bespoke AI solutions that drive growth and productivity for their businesses. If you're pondering how to harness these technologies in your business, or simply fancy a chat about the latest developments in AI, why not reach out?